We developed a new method for creating humor detection datasets by generating non-jokes from jokes. We found that our Dutch language model RobBERT outperforms earlier neural models, such as LSTMs and CNNs, at telling the two apart. In doing so, we created the very first Dutch humor detection systems.


New type of joke dataset

Many previous humor detection systems learned to distinguish jokes from completely different types of text, e.g., news and proverbs. Such texts differ from jokes in topic and style, so a classifier may separate them on surface features alone. Jokes, however, are fragile: changing only a handful of key words completely destroys their funniness.

For our TorfsBot Twitter bot, we had previously developed an algorithm called "Dynamic Templates" that imitates a text by turning it into a more nonsensical version of the original. We used this algorithm to automatically break jokes by replacing their words with words from other jokes.

In the joke

Two fish are in a tank. Says one to the other: "Do you know how to drive this thing?"

the funniness disappears if "tank", "fish" or "drive" is replaced by almost any other word with the same part of speech; the joke turns into an absurd sentence.

For example, the algorithm could replace some or all of these key words with "men", "bar" and "drink", which might all occur in another joke used as context for the generated non-joke:

Two men are in a bar. Says one to the other: "Do you know how to drink this thing?"
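The substitution step can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the actual Dynamic Templates implementation: the function name `break_joke`, the hand-assigned part-of-speech tags (`NOUN_SG`, `NOUN_PL`, `VERB`) and the choice of which words count as key words are all assumptions; a real system would obtain tags from a Dutch part-of-speech tagger.

```python
import random
import re


def break_joke(joke: str,
               key_words: dict[str, str],
               context_words: dict[str, str],
               rng: random.Random) -> str:
    """Hypothetical sketch: swap each key word of `joke` for a word with the
    same (hand-assigned) part-of-speech tag taken from another joke."""
    # Group the context joke's words by part-of-speech tag.
    by_pos: dict[str, list[str]] = {}
    for word, pos in context_words.items():
        by_pos.setdefault(pos, []).append(word)

    # Decide on one replacement per key word before substituting anything.
    mapping: dict[str, str] = {}
    for word, pos in key_words.items():
        candidates = [w for w in by_pos.get(pos, []) if w != word]
        if candidates:
            mapping[word] = rng.choice(candidates)

    if not mapping:
        return joke
    # Substitute all key words in one pass, matching whole words only.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, mapping)) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(1)], joke)


# Usage with the example joke; tags are assigned by hand (assumption).
joke = 'Two fish are in a tank. Says one to the other: "Do you know how to drive this thing?"'
key_words = {"fish": "NOUN_PL", "tank": "NOUN_SG", "drive": "VERB"}
context = {"men": "NOUN_PL", "bar": "NOUN_SG", "drink": "VERB"}
print(break_joke(joke, key_words, context, random.Random(0)))
# Two men are in a bar. Says one to the other: "Do you know how to drink this thing?"
```

Deciding all replacements up front and substituting in a single regular-expression pass keeps a word that was just inserted from being replaced a second time.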

Generated non-joke examples

Here are some examples of (Dutch) non-jokes generated by this approach:

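Het is groen en het is een mummie, Kermit de Waterkant
(It is green and it is a mummy, Kermit the Waterfront)

"Ober, kunt u die schrik uit mijn politieman halen? Want ik eet liever alleen."
("Waiter, can you take that fright out of my policeman? Because I'd rather eat alone.")

Hoe heet de vrouw van Sinterklaas, Keukentafel.
(What is the name of Sinterklaas's wife, Kitchen table.)

Wat is het toppunt van principe? 1) Wachten totdat een Nederlander gaat twijfele 2) Een Zuster met een autoladder 3) Een brandwacht brandmeester met een brandmeester van 9 maanden
(What is the height of principle? 1) Waiting until a Dutchman starts to doubt 2) A sister with a car ladder 3) A fire-watch fire chief with a fire chief of 9 months)

Er loopt een super vriendelijk blondje langs een armband. Last er een toonbank: “zo, waargaan die mooie mannen heen?” Blondje: “naar de barkeeper als er niets tussen komt…”
(A super friendly blonde walks past a bracelet. A counter welds: "so, where are those handsome men going?" Blonde: "to the bartender, if nothing comes up…")

Wat staat er midden in het bos? De kapper.
(What stands in the middle of the forest? The hairdresser.)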

Training neural models

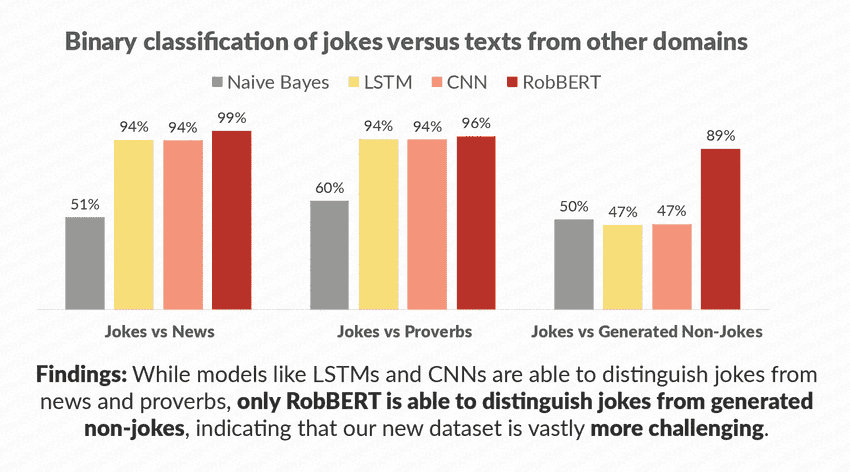

We used this dataset of jokes versus generated non-jokes to train several neural networks. Consistent with what other researchers showed, we also found that neural networks perform well at distinguishing jokes from news and proverbs.
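One way to turn jokes and their generated counterparts into labeled training data is sketched here, assuming each joke is paired with one non-joke generated from it and labeled 1 (joke) or 0 (non-joke). The names `Example` and `build_splits` and the label convention are hypothetical, not taken from the paper.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    text: str
    label: int  # hypothetical convention: 1 = joke, 0 = generated non-joke


def build_splits(pairs: list[tuple[str, str]], test_fraction: float,
                 seed: int) -> tuple[list[Example], list[Example]]:
    """Turn (joke, generated_non_joke) pairs into a balanced train/test split.

    Both members of a pair go to the same split (an assumed design choice)."""
    rng = random.Random(seed)
    shuffled = list(pairs)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)

    def to_examples(chunk: list[tuple[str, str]]) -> list[Example]:
        # Each pair contributes one positive and one negative example.
        examples = []
        for joke, non_joke in chunk:
            examples.append(Example(joke, 1))
            examples.append(Example(non_joke, 0))
        rng.shuffle(examples)
        return examples

    return to_examples(shuffled[n_test:]), to_examples(shuffled[:n_test])
```

Keeping both members of a pair in the same split prevents the model from being tested on a non-joke whose source joke it saw during training, and it keeps both splits balanced between jokes and non-jokes.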

However, as we suspected, CNN and LSTM models completely fail to distinguish jokes from generated non-jokes, whereas our RobBERT model tells them apart about 90% of the time. This suggests that transformer models such as RobBERT may have more of a grasp of humor, or at least of spotting some form of coherence.
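To make "about 90% of the time" concrete: accuracy is the fraction of test texts that receive the correct label, and on a test set with equally many jokes and non-jokes (an assumption here) a model that cannot tell them apart, for example one that always answers "joke", scores 50%. The helper names `accuracy` and `majority_baseline` are hypothetical.

```python
def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of examples whose predicted label matches the gold label."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equally long, non-empty lists")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def majority_baseline(labels: list[int]) -> float:
    """Accuracy of always predicting the most frequent label (1 or 0)."""
    if not labels:
        raise ValueError("need a non-empty list")
    return max(labels.count(0), labels.count(1)) / len(labels)


# A balanced toy test set: 1 = joke, 0 = generated non-joke.
labels = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
always_joke = [1] * len(labels)
print(accuracy(always_joke, labels))  # 0.5
print(majority_baseline(labels))      # 0.5
```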

More information

Presentation

This research also won the Best Video Award at the BNAIC/Benelearn2020 conference. You can watch the video explaining this research below:

Paper & Code

You can find more details in our paper, and instructions for using the model yourself in our GitHub repository.