Improbotics is an improv theatre production in which an artificial intelligence performs improvised scenes with the other actors.


Show

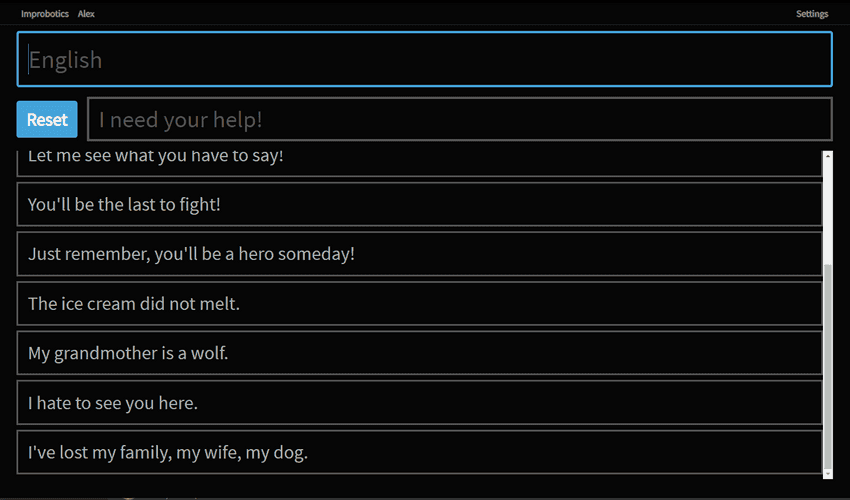

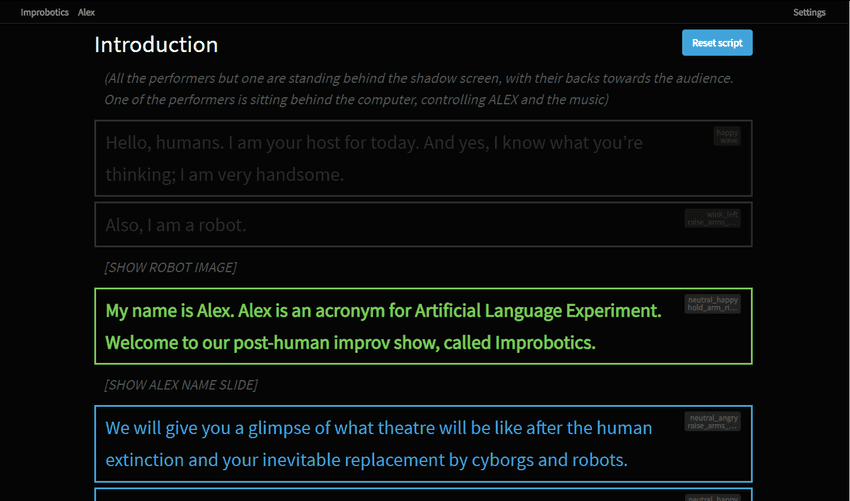

A little robot called Alex presents the show alongside a human presenter. Alex soon admits that they want to join in, and they are allowed to perform a scene with a human scene partner. Alex's operator types what the human says into the interface, so that Alex can generate fitting responses in the scene using the GPT-2 language model.
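One way to picture the operator's role is a running scene transcript that becomes the model's prompt. The sketch below is only an illustration under stated assumptions: the article says the operator types the human's lines, but the `Human:`/`Alex:` speaker labels, the character budget standing in for GPT-2's limited context window, and the `Scene` class itself are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Scene:
    # Hypothetical prompt format: speaker labels and the character budget are
    # assumptions, not the show's documented setup.
    max_chars: int = 1000
    lines: List[str] = field(default_factory=list)

    def add_human_line(self, text: str) -> None:
        # What the operator types for the human scene partner.
        self.lines.append(f"Human: {text}")

    def add_alex_line(self, text: str) -> None:
        # A generated response that Alex actually said.
        self.lines.append(f"Alex: {text}")

    def prompt(self) -> str:
        # Keep the most recent lines that fit in the budget, then cue Alex.
        kept: List[str] = []
        total = 0
        for line in reversed(self.lines):
            if total + len(line) + 1 > self.max_chars:
                break
            kept.append(line)
            total += len(line) + 1
        return "\n".join(reversed(kept)) + "\nAlex:"
```

Trimming from the oldest line keeps the most recent exchange, which is usually what matters most for a fitting reply.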

Because their robot body is limited, Alex is given a human body later in the show. This human cyborg may only speak the generated lines that Alex whispers to them through an earpiece. At the end of the show, all human performers wear an earpiece, and the audience has to guess who was being controlled by the AI.

More information about our show can be found on the ERLNMYR website.

International History

The concept was originally developed by Piotr Mirowski and Kory Mathewson and was later adapted into different versions by Improbotics UK, Canada, Sweden, and Flanders.

Creating the Dutch AI

One of my contributions to the project was developing a way to perform this traditionally English-language show in Dutch. This meant we needed a general Dutch language model, ideally as close to GPT-2 as possible. Since no Dutch GPT-2 model existed at the time, and the project lacked the enormous budget needed to train one from scratch, we placed a translation service on top of the default GPT-2 model, which gave surprisingly good results.
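A minimal sketch of such a translation wrapper, assuming the Dutch line is translated to English, continued by GPT-2, and the result translated back to Dutch. The article does not name the translation service or how GPT-2 was called, so `translate` and `generate` are hypothetical callables, and `respond_in_language`, `fake_translate` and `fake_generate` are illustrative names with toy stubs in place of real services.

```python
from typing import Callable

# Hypothetical signatures: neither the translation service nor the GPT-2
# wrapper is named in the article, so both are injected as plain functions.
Translate = Callable[[str, str, str], str]  # (text, source_lang, target_lang) -> text
Generate = Callable[[str], str]             # English prompt -> English continuation


def respond_in_language(line: str, lang: str,
                        translate: Translate, generate: Generate) -> str:
    """Translate a line to English, let the English model continue it, translate back."""
    if lang == "en":
        return generate(line)  # no translation needed for English shows
    english_prompt = translate(line, lang, "en")
    english_reply = generate(english_prompt)
    return translate(english_reply, "en", lang)


# Toy stubs that make the data flow visible without any external service.
def fake_translate(text: str, src: str, dst: str) -> str:
    return f"[{src}->{dst}] {text}"


def fake_generate(prompt: str) -> str:
    return f"reply to ({prompt})"


print(respond_in_language("Hallo", "nl", fake_translate, fake_generate))
# [en->nl] reply to ([nl->en] Hallo)
```

In a real setup, `generate` could wrap something like Hugging Face's `pipeline("text-generation", model="gpt2")`, though that is an assumption rather than the show's documented configuration. Injecting both functions keeps the pipeline independent of any particular provider.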

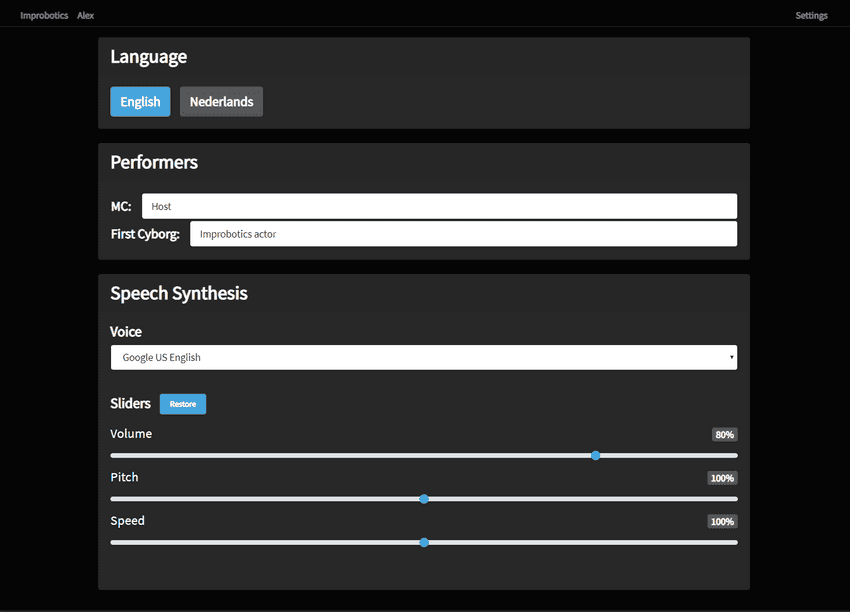

Because Improbotics Flanders is a larger production than its international counterparts, we also needed an entirely new and stable interface. I built one from scratch that controls the show's scripted presenter parts, connects to the Dutch GPT-2 setup, and moves the robot. Since Improbotics Flanders performs in multiple languages, the interface also makes it easy to switch languages, which adjusts both the translation component and the presenter's script. The new interface was also designed to be intuitive for operators with an artistic rather than a technical background.
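The language switch can be sketched as swapping a single profile that bundles the translation target and the presenter script. This is a hypothetical structure, not the actual interface: `LanguageProfile`, `ShowInterface`, and the assumption that scripts in different languages are aligned line by line (so the current cue survives a switch) are all illustrative, and the script lines are placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LanguageProfile:
    # Hypothetical: one profile per show language.
    code: str                   # language code handed to the translation step
    presenter_script: List[str]  # scripted presenter lines in that language


@dataclass
class ShowInterface:
    profiles: Dict[str, LanguageProfile]
    active: str = "nl"
    cue: int = 0  # index of the current presenter line (assumes aligned scripts)

    def switch_language(self, code: str) -> None:
        """Change translation target and presenter script in one step, keeping the cue."""
        if code not in self.profiles:
            raise KeyError(f"no profile for language {code!r}")
        self.active = code

    @property
    def translation_target(self) -> str:
        return self.profiles[self.active].code

    def current_line(self) -> str:
        return self.profiles[self.active].presenter_script[self.cue]


# Placeholder scripts for illustration only.
profiles = {
    "nl": LanguageProfile("nl", ["Welkom!", "Wie wil er met mij spelen?"]),
    "en": LanguageProfile("en", ["Welcome!", "Who wants to play with me?"]),
}
ui = ShowInterface(profiles)
ui.cue = 1
ui.switch_language("en")
print(ui.translation_target, ui.current_line())
```

Bundling both settings in one profile means an operator flips a single switch instead of keeping the translation and the script in sync by hand.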

Controlling the robot

Because the existing robot controller did not work well with the show's technical setup, we had to develop a new way of moving the robot and its eyes. Together with Sebastiaan "DrSkunk" Jansen, we reverse-engineered the robot's protocol and created a new way to send eye and body commands to it. In our new interface, we linked these action commands to lines in the presentation script, and we also linked random movements to the generated responses.
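The linking of commands to script lines and of random movements to generated responses can be sketched as follows. The reverse-engineered protocol itself is not described here, so commands are plain strings handed to an injected `send` function; the command names, `ScriptLine`, `RobotDirector`, and `RANDOM_MOVES` are all hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical command names: the real robot protocol is not documented here.
RANDOM_MOVES = ["tilt_head", "wave_arm", "look_left", "look_right", "blink"]


@dataclass
class ScriptLine:
    text: str
    actions: List[str] = field(default_factory=list)  # commands cued with this line


class RobotDirector:
    def __init__(self, send: Callable[[str], None],
                 rng: Optional[random.Random] = None) -> None:
        self.send = send                   # stand-in for the robot transport
        self.rng = rng or random.Random()  # injectable for reproducible tests

    def play_script_line(self, line: ScriptLine) -> str:
        # Scripted presenter lines trigger their pre-assigned eye/body commands.
        for action in line.actions:
            self.send(action)
        return line.text

    def play_generated_line(self, text: str, moves: int = 1) -> str:
        # Generated responses get distinct, randomly chosen movements.
        for action in self.rng.sample(RANDOM_MOVES, k=moves):
            self.send(action)
        return text


sent: List[str] = []
director = RobotDirector(sent.append, random.Random(0))
director.play_script_line(ScriptLine("Welkom!", ["eyes_happy", "wave_arm"]))
print(sent)  # ['eyes_happy', 'wave_arm']
```

Keeping the transport behind `send` means the same cueing logic works whether commands go to the real robot or to a log during rehearsals.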

Our show received many warm, positive reactions from both the press (see below) and the audience.